Learning from Physical Human Feedback: An Object-Centric One-Shot Adaptation Method

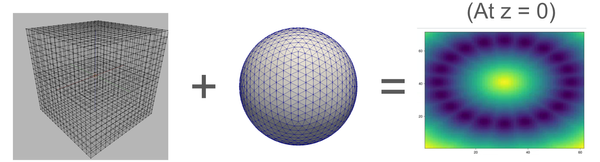

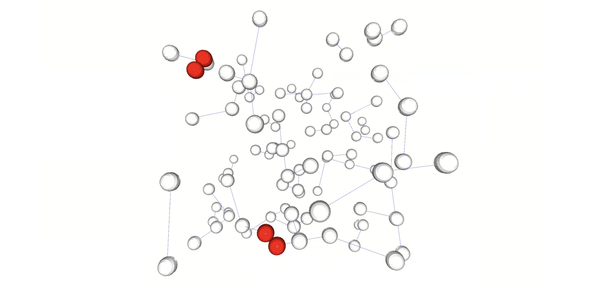

The paper introduces Object Preference Adaptation (OPA), our approach to adapting robot behavior based on physical human feedback. The method lets a robot understand and adjust to human preferences in real time by interpreting each physical intervention on the robot in relation to specific objects. Our insight is that most human preferences can be attributed to objects in the environment.

Paper Website

https://alvinosaur.github.io/AboutMe/projects/opa/

Adapting the policy

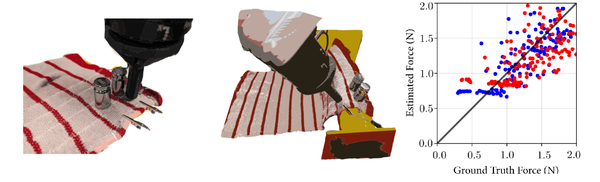

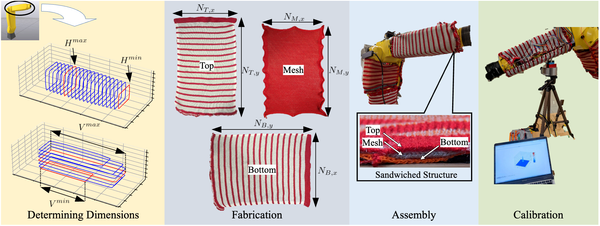

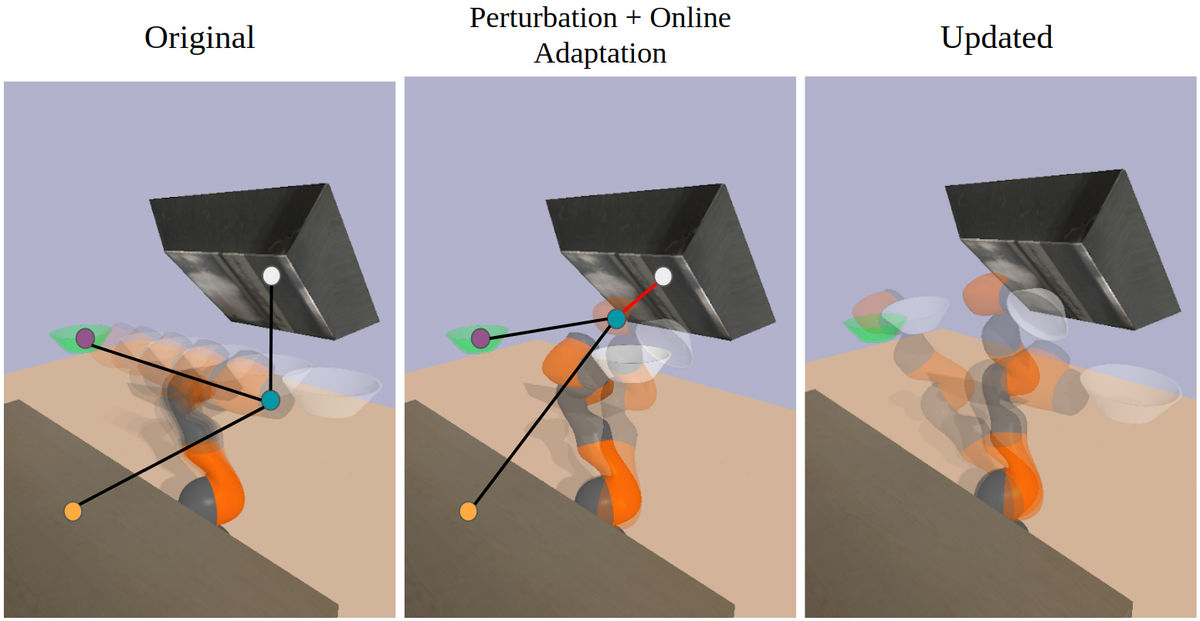

OPA first trains a base policy to generate a range of behaviors, then updates this policy online in response to human feedback. Notably, the adaptation requires only a single human intervention, after which the robot can produce new behaviors that were not part of its initial training. The process relies on synthetic data, a cost-effective alternative to extensive human demonstrations.
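The two-stage idea (a fixed base policy, then a one-shot update from a single correction) can be illustrated with a toy sketch. This is not the paper's model: it assumes a hand-written potential-field policy in 2D where each object carries one scalar preference weight, and all names (`policy_step`, `object_basis`, `adapt_weights`) and values (positions, learning rate, step count) are hypothetical choices made for illustration. Only the per-object weights are updated; the policy's form stays fixed.

```python
import numpy as np

def object_basis(pos, objects):
    """Per-object push direction at `pos`: points away from each object, scaled by 1/distance."""
    away = pos - objects                                  # shape (n_objects, 2)
    dist = np.linalg.norm(away, axis=1, keepdims=True)
    return away / dist**2

def policy_step(pos, goal, objects, weights):
    """Toy stand-in for a base policy: head toward the goal, pushed (w > 0) or pulled (w < 0) by objects."""
    to_goal = goal - pos
    goal_dir = to_goal / np.linalg.norm(to_goal)
    return goal_dir + weights @ object_basis(pos, objects)

def adapt_weights(pos, goal, objects, weights, corrected_dir, lr=0.2, steps=200):
    """One-shot adaptation: fit the object weights to a single corrected direction by gradient descent."""
    weights = weights.copy()
    basis = object_basis(pos, objects)                    # d(direction) / d(weight_i)
    for _ in range(steps):
        err = policy_step(pos, goal, objects, weights) - corrected_dir
        weights -= lr * (basis @ err)                     # gradient of 0.5 * ||err||^2
    return weights

# Hypothetical scene: two objects, and one human correction that steers away from the first.
pos = np.array([0.0, 0.0])
goal = np.array([2.0, 0.0])
objects = np.array([[0.5, -0.5], [0.5, 0.5]])
base_weights = np.zeros(2)                                # base policy ignores both objects
corrected_dir = np.array([0.2, 0.8])                      # direction the human pushed the robot toward

adapted = adapt_weights(pos, goal, objects, base_weights, corrected_dir)
print("adapted object weights:", np.round(adapted, 3))
```

Because the update is stored per object rather than per location, the adapted weights change the behavior at positions the human never corrected, which is the property the object-centric framing is meant to provide.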

Why this work may be useful

OPA significantly reduces the amount of training data needed to teach robots. By using synthetic data and learning directly from a single human intervention, it avoids the extensive, costly datasets typically used in robot training. It does so by focusing on the relationships between the human and the objects in the environment. This efficient use of data not only speeds up training but also makes the method more generalizable across users.

Why this work may not be useful

The effectiveness of OPA may be limited because it attributes human preferences primarily to object positions, potentially overlooking other critical factors such as object color, texture, the surrounding context, or the task. This narrow focus may not capture the full complexity of human preferences, making the method less adaptable in real-world scenarios where such nuances play a significant role.

Verdict: Useful for a restricted set of human-robot interaction and collaboration scenarios.